Functions

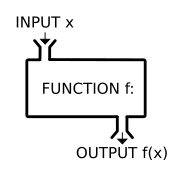

A function f is like a "black box" that takes an input x, and returns a single corresponding output f(x).

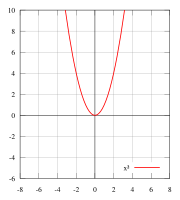

The red curve is the graph of a function f in the Cartesian plane, consisting of all points with coordinates of the form (x, f(x)). The property of having one output for each input is represented geometrically by the fact that each vertical line (such as the yellow line through the origin) has exactly one crossing point with the curve.

From Wikipedia:Function (mathematics)

In mathematics, a function is a *relation between a *set of inputs and a set of permissible outputs with the property that each input is related to exactly one output. An example is the function that relates each *real number x to its square x². The output of a function f corresponding to an input x is denoted by f(x) (read "f of x"). In this example, if the input is −3, then the output is 9, and we may write f(−3) = 9. See Tutorial:Evaluate by Substitution. Likewise, if the input is 3, then the output is also 9, and we may write f(3) = 9. (The same output may be produced by more than one input, but each input gives only one output.) The input *variable(s) are sometimes referred to as the argument(s) of the function.
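As a minimal sketch of the squaring example above, the function can be written in Python. The name `square` is chosen here for illustration and does not come from the original text. Two different inputs give the same output, but each input gives exactly one output.

```python
def square(x):
    """Return the single output associated with the input x."""
    return x * x


print(square(-3))  # 9
print(square(3))   # 9
```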

Euclid's "common notions"

Things that do not differ from one another are equal to one another

| a=a |

Things that are equal to the same thing are also equal to one another

| If | a=b and b=c | then a=c |

If equals are added to equals, then the wholes are equal

| If | a=b and c=d | then a+c=b+d |

If equals are subtracted from equals, then the remainders are equal

| If | a=b and c=d | then a-c=b-d |

The whole is greater than the part.

| If | b>0 | then a+b>a |

Elementary algebra

ƒ(x) = x² is an example of an even function.

Elementary algebra builds on and extends arithmetic by introducing letters called *variables to represent general (non-specified) numbers.

Algebraic expressions may be evaluated and simplified, based on the basic properties of arithmetic operations (addition, subtraction, multiplication, division and exponentiation). For example,

- Added terms are simplified using coefficients. For example, $x + x + x$ can be simplified as $3x$ (where 3 is a numerical coefficient).

- Multiplied terms are simplified using exponents. For example, $x \times x \times x$ is represented as $x^3$.

- Like terms are added together,[1] for example, $2x^2 + 3ab - x^2 + ab$ is written as $x^2 + 4ab$, because the terms containing $x^2$ are added together, and the terms containing $ab$ are added together.

- Brackets can be "multiplied out", using the distributive property. For example, $x(2x + 3)$ can be written as $(x \times 2x) + (x \times 3)$, which can be written as $2x^2 + 3x$.

- Expressions can be factored. For example, $6x^5 + 3x^2$, by dividing both terms by $3x^2$, can be written as $3x^2(2x^3 + 1)$.

ƒ(x) = x³ is an example of an odd function.

For any function $f$, if $a = b$ then $f(a) = f(b)$.

One must be careful, though, when squaring both sides of an equation, since this can result in solutions that don't satisfy the original equation.

- $1 \neq -1$ yet $1^2 = (-1)^2$

A function is an even function if f(x) = f(-x)

A function is an odd function if f(x) = -f(-x)
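The two definitions above can be spot-checked numerically. The sketch below is illustrative only: the helper names are made up for this example, and testing a handful of sample points can refute evenness or oddness but cannot prove it.

```python
def is_even_function(f, samples, tol=1e-9):
    """True if f(x) == f(-x) (within tol) at every sample point."""
    return all(abs(f(x) - f(-x)) <= tol for x in samples)


def is_odd_function(f, samples, tol=1e-9):
    """True if f(x) == -f(-x) (within tol) at every sample point."""
    return all(abs(f(x) + f(-x)) <= tol for x in samples)


samples = [0.5, 1.0, 2.0, 3.5]
print(is_even_function(lambda x: x**2, samples))  # True
print(is_odd_function(lambda x: x**3, samples))   # True
print(is_even_function(lambda x: x**3, samples))  # False
```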

Trigonometry

The law of cosines, $c^2 = a^2 + b^2 - 2ab\cos\gamma$, reduces to the Pythagorean theorem $c^2 = a^2 + b^2$ when $\gamma = 90^\circ$.

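The law of sines (also known as the "sine rule") for an arbitrary triangle states:

$$\frac{a}{\sin A} = \frac{b}{\sin B} = \frac{c}{\sin C} = \frac{abc}{2\Delta}$$

where $\Delta$ is the area of the triangle

The law of tangents:

$$\frac{a-b}{a+b} = \frac{\tan\left[\tfrac{1}{2}(A-B)\right]}{\tan\left[\tfrac{1}{2}(A+B)\right]}$$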

The parallelogram law reduces to the Pythagorean theorem when the parallelogram is a rectangle

Right triangles

A right triangle is a triangle with gamma=90 degrees.

For small values of x (with x in radians), sin x ≈ x.

| SOH → sin = "opposite" / "hypotenuse" | sin A = a/c |
| CAH → cos = "adjacent" / "hypotenuse" | cos A = b/c |
| TOA → tan = "opposite" / "adjacent" | tan A = a/b |

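In terms of complex exponentials:

$$\sin x = \frac{e^{ix} - e^{-ix}}{2i}, \qquad \cos x = \frac{e^{ix} + e^{-ix}}{2}, \qquad \tan x = \frac{i\,(e^{-ix} - e^{ix})}{e^{ix} + e^{-ix}}$$

(Note: the expression of tan(x) has i in the numerator, not in the denominator, because the order of the terms (and thus the sign) of the numerator is changed w.r.t. the expression of sin(x).)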

Hyperbolic functions

- See also: *Hyperbolic angle

A ray through the *unit hyperbola $x^2 - y^2 = 1$ in the point $(\cosh a, \sinh a)$, where $a$ is twice the area between the ray, the hyperbola, and the $x$-axis. For points on the hyperbola below the $x$-axis, the area is considered negative (see animated version with comparison with the trigonometric (circular) functions).

Circle and hyperbola tangent at (1,1) display geometry of circular functions in terms of *circular sector area u and hyperbolic functions depending on *hyperbolic sector area u.

Hyperbolic functions are analogs of the ordinary trigonometric, or circular, functions.

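- Hyperbolic sine: $\sinh x = \frac{e^x - e^{-x}}{2}$

- Hyperbolic cosine: $\cosh x = \frac{e^x + e^{-x}}{2}$

- Hyperbolic tangent: $\tanh x = \frac{\sinh x}{\cosh x} = \frac{e^x - e^{-x}}{e^x + e^{-x}}$

- Hyperbolic cotangent: $\coth x = \frac{\cosh x}{\sinh x}$, for $x \neq 0$

- Hyperbolic secant: $\operatorname{sech} x = \frac{1}{\cosh x}$

- Hyperbolic cosecant: $\operatorname{csch} x = \frac{1}{\sinh x}$, for $x \neq 0$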

Areas and volumes

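The length of the circumference C of a circle is related to the radius r and diameter d by:

$$C = 2\pi r = \pi d = \tau r$$

- where

- π ≈ 3.141592654

- τ = 2π

The area of a circle is:

$$A = \pi r^2$$

The surface area of a sphere is

$$A = 4\pi r^2$$

- The surface area of a sphere 1 unit in radius is: $4\pi \approx 12.566$

- The surface area of a sphere 128 units in radius is: $4\pi \cdot 128^2 = 65536\pi \approx 205887.4$

The volume inside a sphere is

$$V = \tfrac{4}{3}\pi r^3$$

- The volume of a sphere 1 unit in radius is: $\tfrac{4}{3}\pi \approx 4.18879$

The moment of inertia of a hollow sphere (a thin shell of mass m and radius r, about a diameter) is:

$$I = \tfrac{2}{3} m r^2$$

The moment of inertia of a solid sphere is:

$$I = \tfrac{2}{5} m r^2$$

The area of a regular hexagon is:

$$A = \tfrac{3\sqrt{3}}{2} a^2$$

- where a is the length of any side.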
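The quantities in this section can be checked numerically. The sketch below assumes the standard formulas (circumference 2πr, circle area πr², sphere surface area 4πr², sphere volume (4/3)πr³, regular hexagon area (3√3/2)a²); the function names are illustrative and not taken from the original page.

```python
import math


def circumference(r):
    return 2 * math.pi * r


def circle_area(r):
    return math.pi * r**2


def sphere_surface_area(r):
    return 4 * math.pi * r**2


def sphere_volume(r):
    return 4 / 3 * math.pi * r**3


def regular_hexagon_area(a):
    return 3 * math.sqrt(3) / 2 * a**2


# The two spheres mentioned in the text, plus the unit-sphere volume.
print(round(sphere_surface_area(1), 3))
print(round(sphere_surface_area(128), 1))
print(round(sphere_volume(1), 5))
```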

Fractals

A square that is twice as big is four times as massive because it is 2 dimensional (2² = 4). A cube that is twice as big is eight times as massive because it is 3 dimensional (2³ = 8).

A triangle that is twice as big is four times as massive.

But a Sierpiński triangle that is twice as big is exactly three times as massive. It therefore has a Hausdorff dimension of log₂ 3 ≈ 1.5850 (2^1.5850 ≈ 3).

A pyramid that is twice as big is eight times as massive.

But a Sierpiński pyramid that is twice as big is exactly five times as massive. It therefore has a Hausdorff dimension of log₂ 5 ≈ 2.3219 (2^2.3219 ≈ 5).
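The dimensions quoted above follow from solving scale^d = copies for d. A small sketch, assuming this self-similarity argument is what the text intends (the helper name `similarity_dimension` is hypothetical):

```python
import math


def similarity_dimension(copies, scale):
    """Return d such that scale**d == copies, i.e. d = log(copies) / log(scale)."""
    return math.log(copies) / math.log(scale)


print(round(similarity_dimension(4, 2), 4))  # square
print(round(similarity_dimension(8, 2), 4))  # cube
print(round(similarity_dimension(3, 2), 4))  # Sierpinski triangle
print(round(similarity_dimension(5, 2), 4))  # Sierpinski pyramid (square base)
```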

Polynomials

- See also: *Runge's phenomenon, *Polynomial ring, *System of polynomial equations, *Rational root theorem, *Descartes' rule of signs, and *Complex conjugate root theorem

- From Wikipedia:Polynomial:

A polynomial can always be written in the form

$$a_n x^n + a_{n-1} x^{n-1} + \dots + a_2 x^2 + a_1 x + a_0$$

- where $a_0, \ldots, a_n$ are constants called coefficients and n is the degree of the polynomial.

- A *linear polynomial is a polynomial of degree one.

- Each individual *term is the product of the *coefficient and a variable raised to a nonnegative integer power.

- A *monomial has only one term.

- A *binomial has 2 terms.

*Fundamental theorem of algebra:

- Every single-variable, degree n polynomial with complex coefficients has exactly n complex roots.

- However, some or even all of the roots might be the same number.

- A root (or zero) of a function is a value of x for which Z(x)=0.

- If $Z(x) = (x - z_2)^k\,Q(x)$ with $Q(z_2) \neq 0$ then z₂ is a root of *multiplicity k.[2] z₂ is a root of multiplicity k-1 of the derivative (Derivative is defined below) of Z(x).

- If k=1 then z₂ is a simple root.

- The graph is tangent to the x axis at the multiple roots of Z and not tangent at the simple roots.

- The graph crosses the x-axis at roots of odd multiplicity and bounces off (does not go through) the x-axis at roots of even multiplicity.

- Near x=z₂ the graph has the same general shape as $A(x - z_2)^k$

- The *complex conjugate root theorem states that if the coefficients of a polynomial are real, then the non-real roots appear in pairs of the form (a + ib, a – ib).

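- The roots of the quadratic equation $ax^2 + bx + c = 0$ are given by the Quadratic formula: $x = \frac{-b \pm \sqrt{b^2 - 4ac}}{2a}$

- $b^2 - 4ac$ is called the discriminant.

- A quadratic can also be written as $a(x - h)^2 + k$. This is a parabola shifted to the right h units, stretched by a factor of a, and moved upward k units.

- k is the value at x=h and is either the maximum or the minimum value.

- The roots of $a_n x^n + a_{n-1} x^{n-1} + \dots + a_1 x + a_0$ are the multiplicative inverses of the roots of $a_0 x^n + a_1 x^{n-1} + \dots + a_{n-1} x + a_n$ (provided $a_0 \neq 0$ and $a_n \neq 0$).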

- There is no formula for the roots of a fifth (or higher) degree polynomial equation in terms of the coefficients of the polynomial, using only the usual algebraic operations (addition, subtraction, multiplication, division) and application of radicals (square roots, cube roots, etc). See *Galois theory.

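- The binomial theorem: $(x + y)^n = \sum_{k=0}^{n} \binom{n}{k} x^{n-k} y^k$, where $\binom{n}{k} = \frac{n!}{k!\,(n-k)!}$. See Binomial coefficient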

- Isaac Newton generalized the binomial theorem to allow real exponents other than nonnegative integers. (The same generalization also applies to complex exponents.) In this generalization, the finite sum is replaced by an infinite series.[3]
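The quadratic formula and the discriminant from the list above can be sketched as follows. The helper `quadratic_roots` is a hypothetical name; it uses `cmath.sqrt` so that a negative discriminant yields a complex-conjugate pair, in line with the complex conjugate root theorem.

```python
import cmath


def quadratic_roots(a, b, c):
    """Return both roots of a*x**2 + b*x + c = 0 (a must be nonzero)."""
    if a == 0:
        raise ValueError("not a quadratic: a must be nonzero")
    discriminant = b * b - 4 * a * c
    sqrt_disc = cmath.sqrt(discriminant)
    return (-b + sqrt_disc) / (2 * a), (-b - sqrt_disc) / (2 * a)


print(quadratic_roots(1, -3, 2))  # two real roots: 2 and 1
print(quadratic_roots(1, 0, 1))   # conjugate pair: i and -i
print(quadratic_roots(1, -2, 1))  # discriminant 0: a double root at 1
```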

The polynomial remainder theorem states that the remainder of the division of a polynomial Z(x) by the linear polynomial x-a is equal to Z(a). See *Ruffini's rule.

Determining the value at Z(a) is sometimes easier if we use *Horner's method (*synthetic division) by writing the polynomial in the form

$$Z(x) = a_0 + x\Big(a_1 + x\big(a_2 + \cdots + x(a_{n-1} + a_n x)\cdots\big)\Big)$$

A *monic polynomial is a one variable polynomial in which the leading coefficient is equal to 1.
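Horner's method and the polynomial remainder theorem described above can be illustrated together. In this sketch (function names are illustrative), coefficients are listed from the highest power down to the constant term, and dividing by x − a yields a remainder equal to Z(a).

```python
def horner(coefficients, x):
    """Evaluate a_n*x**n + ... + a_0 with coefficients ordered a_n, ..., a_0."""
    result = 0
    for coefficient in coefficients:
        result = result * x + coefficient
    return result


def divide_by_linear(coefficients, a):
    """Synthetic division by (x - a): return (quotient coefficients, remainder)."""
    partial = []
    accumulator = 0
    for coefficient in coefficients:
        accumulator = accumulator * a + coefficient
        partial.append(accumulator)
    return partial[:-1], partial[-1]


z = [2, -6, 2, -1]  # Z(x) = 2x^3 - 6x^2 + 2x - 1
print(horner(z, 3))            # 5
print(divide_by_linear(z, 3))  # ([2, 0, 2], 5)
```

The remainder returned by `divide_by_linear` matches `horner(z, 3)`, which is what the remainder theorem predicts.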

Rational functions

A *rational function is a function of the form

$$f(x) = k\,\frac{(x - z_1)(x - z_2)\cdots(x - z_n)}{(x - p_1)(x - p_2)\cdots(x - p_m)}$$

It has n zeros and m poles. A pole is a value of x for which |f(x)| = ∞.

- The vertical asymptotes are the poles of the rational function.

- If n<m then f(x) has a horizontal asymptote at the x axis

- If n=m then f(x) has a horizontal asymptote at k.

- If n>m then f(x) has no horizontal asymptote.

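- Given two polynomials $Z(x)$ and $P(x) = (x - p_1)(x - p_2)\cdots(x - p_m)$, where the $p_i$ are distinct constants and deg Z < m, partial fractions are generally obtained by supposing that $\frac{Z(x)}{P(x)} = \frac{c_1}{x - p_1} + \frac{c_2}{x - p_2} + \cdots + \frac{c_m}{x - p_m}$

- and solving for the $c_i$ constants, by substitution, by *equating the coefficients of terms involving the powers of x, or otherwise.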

- (This is a variant of the *method of undetermined coefficients.)[4]

- If the degree of Z is not less than m then use long division to divide P into Z. The remainder then replaces Z in the equation above and one proceeds as before.

- If then

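A *Generalized hypergeometric series is given by

$$\sum_{j=0}^{\infty} c_j x^j$$

- where c₀=1 and the ratio of successive coefficients is a rational function of the index: $\frac{c_{j+1}}{c_j} = f(j)$

The function f(x) has n zeros and m poles.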

- *Basic hypergeometric series, or hypergeometric q-series, are *q-analogue generalizations of generalized hypergeometric series.[5]

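- We define the q-analog of n, also known as the q-bracket or q-number of n, to be $[n]_q = \frac{1 - q^n}{1 - q} = q^0 + q^1 + q^2 + \ldots + q^{n-1}$

- one may define the q-analog of the factorial, known as the *q-factorial, by $[n]_q! = [1]_q \cdot [2]_q \cdots [n-1]_q \cdot [n]_q$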

- *Elliptic hypergeometric series are generalizations of basic hypergeometric series.

- An elliptic function is a meromorphic function that is periodic in two directions.

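A *generalized hypergeometric function is given by

$${}_pF_q(a_1,\ldots,a_p;\,b_1,\ldots,b_q;\,x) = \sum_{n=0}^{\infty} \frac{(a_1)_n \cdots (a_p)_n}{(b_1)_n \cdots (b_q)_n}\,\frac{x^n}{n!}$$

where $(a)_n = a(a+1)\cdots(a+n-1)$ is the rising factorial. So for $e^x$ (see below) we have:

$$e^x = \sum_{n=0}^{\infty} \frac{x^n}{n!} = {}_0F_0(;;x)$$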

Integration and differentiation

Force • distance = energy

- See also: Hyperreal number and Implicit differentiation

The integral is a generalization of multiplication.

- For example: a unit mass dropped from point x₂ to point x₁ will release energy.

- The usual equation is a simple multiplication: $E = g \cdot (x_2 - x_1)$, where g is the strength of gravity.

- But that equation can't be used if the strength of gravity is itself a function of x.

- The strength of gravity at x₁ would be different than it is at x₂.

- And in reality gravity really does depend on x (x is the distance from the center of the earth): $g(x) = \frac{GM}{x^2}$

- (See inverse-square law.)

- However, the corresponding definite integral is easily solved: $E = \int_{x_1}^{x_2} \frac{GM}{x^2}\,dx = GM\left(\frac{1}{x_1} - \frac{1}{x_2}\right)$
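The gravity example above can be checked numerically. The sketch below is an illustration under stated assumptions: units are chosen so that GM = 1, the integral is approximated with a simple midpoint rule, and the names `midpoint_integral` and `inverse_square` are hypothetical. For a constant force the integral reduces to ordinary multiplication.

```python
def midpoint_integral(f, a, b, steps=100_000):
    """Approximate the definite integral of f from a to b with the midpoint rule."""
    width = (b - a) / steps
    return sum(f(a + (i + 0.5) * width) for i in range(steps)) * width


def inverse_square(x):
    """Strength of gravity at distance x, in units where GM = 1 (an assumption)."""
    return 1.0 / x**2


x1, x2 = 1.0, 2.0
numeric = midpoint_integral(inverse_square, x1, x2)
exact = 1.0 / x1 - 1.0 / x2  # antiderivative -1/x evaluated between x1 and x2
print(numeric, exact)

# Constant force: the integral is just force times distance.
print(midpoint_integral(lambda x: 9.8, 0.0, 3.0), 9.8 * 3.0)
```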

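The surprisingly simple rule for solving definite integrals is

$$\int_a^b f(x)\,dx = F(b) - F(a)$$

F(x) is called the indefinite integral (antiderivative) of f(x).

k and y are arbitrary constants (with y ≠ −1):

$$\int k\,x^y\,dx = k\,\frac{x^{y+1}}{y+1}$$

(Units (feet, mm...) behave exactly like constants.)

And most conveniently:

$$\int \big(f(x) + g(x)\big)\,dx = \int f(x)\,dx + \int g(x)\,dx$$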

- The integral of a function is equal to the area under the curve.

- When the "curve" is a constant (in other words, k•x⁰) then the integral reduces to ordinary multiplication.

The derivative is a generalization of division.

The derivative of the integral of f(x) is just f(x).

The derivative of a function at any point is equal to the slope of the function at that point.

The equation of the line tangent to a function at point a is $y = f(a) + f'(a)\,(x - a)$

The Lipschitz constant of a function is a real number for which the absolute value of the slope of the function at every point is not greater than this real number.

The derivative of f(x) where $f(x) = k\,x^y$ is $f'(x) = k\,y\,x^{y-1}$

- The derivative of $a^x$ is $a^x \ln a$

- The integral of $\frac{1}{x}$ is ln(x)[7]. See natural log
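The slope interpretation and the power rule can be compared numerically. This sketch assumes the power rule in its usual form (the derivative of k·x^y is k·y·x^(y−1)) and uses a central difference as the approximation; `derivative` is an illustrative helper, not a library function.

```python
import math


def derivative(f, x, h=1e-6):
    """Approximate the slope of f at x with a central difference."""
    return (f(x + h) - f(x - h)) / (2 * h)


k, y = 3.0, 4.0


def power_function(x):
    return k * x**y


numeric = derivative(power_function, 2.0)
exact = k * y * 2.0 ** (y - 1)  # power rule: 3 * 4 * 2**3 = 96
print(numeric, exact)

# The slope of ln(x) at x = 2 should be close to 1/2.
print(derivative(math.log, 2.0))
```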

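Chain rule for the derivative of a function of a function:

$$\frac{d}{dx} f(g(x)) = \frac{df}{dg}\cdot\frac{dg}{dx}$$

The Chain rule for a function of 2 functions:

$$\frac{d}{dx} f(g(x), h(x)) = \frac{\partial f}{\partial g}\frac{dg}{dx} + \frac{\partial f}{\partial h}\frac{dh}{dx}$$

- (See "partial derivatives" below)

The Product rule can be considered a special case of the chain rule for several variables[8]

$$\frac{d(ab)}{dx} = \frac{\partial (ab)}{\partial a}\frac{da}{dx} + \frac{\partial (ab)}{\partial b}\frac{db}{dx} = b\,\frac{da}{dx} + a\,\frac{db}{dx}$$

$$d(ab) = (a + da)(b + db) - ab = b\,da + a\,db + da\,db \approx b\,da + a\,db$$

- (because $da\,db$ is negligible)

By the chain rule:

$$\frac{d}{dx}\left(\frac{1}{b}\right) = -\frac{1}{b^2}\frac{db}{dx}$$

Therefore the Quotient rule:

$$\frac{d}{dx}\left(\frac{a}{b}\right) = \frac{1}{b}\frac{da}{dx} - \frac{a}{b^2}\frac{db}{dx} = \frac{b\,\frac{da}{dx} - a\,\frac{db}{dx}}{b^2}$$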

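There is a chain rule for integration, but the inner function must have the form $g = ax + b$ so that its derivative is $\frac{dg}{dx} = a$ and therefore $dx = \frac{dg}{a}$, giving $\int f(ax + b)\,dx = \frac{1}{a}\int f(g)\,dg$.

Actually the inner function can have the form $g = a x^y + b$ so that its derivative is $\frac{dg}{dx} = a\,y\,x^{y-1}$ and therefore $dx = \frac{dg}{a\,y\,x^{y-1}}$, provided that all factors involving x cancel out.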

The product rule for integration is called Integration by parts

One can use partial fractions or even the Taylor series to convert difficult integrals into a more manageable form.

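The fundamental theorem of calculus is:

$$\frac{d}{dx}\int_a^x f(t)\,dt = f(x)$$

The fundamental theorem of calculus is just a particular case of the *Leibniz integral rule:

$$\frac{d}{dx}\int_{a(x)}^{b(x)} f(x,t)\,dt = f(x, b(x))\,\frac{db}{dx} - f(x, a(x))\,\frac{da}{dx} + \int_{a(x)}^{b(x)} \frac{\partial f(x,t)}{\partial x}\,dt$$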

In calculus, a function f defined on a subset of the real numbers with real values is called *monotonic if and only if it is either entirely non-increasing, or entirely non-decreasing.[9]

A differential form is a generalisation of the notion of a differential that is independent of the choice of *coordinate system. f(x,y) dx ∧ dy is a 2-form in 2 dimensions (an area element). The derivative operation on an n-form is an (n+1)-form; this operation is known as the exterior derivative. By the generalized Stokes' theorem, the integral of a form over the boundary of a manifold is equal to the integral of its exterior derivative on the manifold itself.

Taylor & Maclaurin series

If we know the value of a smooth function at x=0 (smooth means all its derivatives are continuous) and we also know the value of all of its derivatives at x=0 then we can determine the value at any other point x by using the Maclaurin series, provided the series converges to the function. ("!" means factorial)

$$f(x) = f(0) + f'(0)\,x + \frac{f''(0)}{2!}x^2 + \frac{f'''(0)}{3!}x^3 + \cdots = \sum_{n=0}^{\infty} \frac{f^{(n)}(0)}{n!}x^n$$

The proof of this is actually quite simple. Write $f(x) = a_0 + a_1 x + a_2 x^2 + \cdots$. Plugging in a value of x=0 causes all terms but the first to become zero. So, assuming that such a series exists, a₀ must be the value of the function at x=0. Simply differentiate both sides of the equation and repeat for the next term, and so on, which gives $a_n = f^{(n)}(0)/n!$.

Because the functions can be multiplied by scalars and added they therefore form an infinite dimensional vector space. (An infinite dimensional space is not a *Compact space.) The function f(x) occupies a single point in that infinite dimensional space corresponding to a vector whose components are $(a_0, a_1, a_2, \ldots)$

The Taylor series generalizes the Maclaurin series to expansion about any point a:

$$f(x) = \sum_{n=0}^{\infty} \frac{f^{(n)}(a)}{n!}(x - a)^n$$

Riemann surface for the function ƒ(z) = √z. For the imaginary part rotate 180°.

- An analytic function is a function that equals its Taylor series in some neighborhood of every z₀ in its domain; analytic functions are infinitely differentiable.

- Any vector g = (z₀, α₀, α₁, ...) is a *germ if it represents a power series of an analytic function around z₀ with some radius of convergence r > 0.

- The set of germs is a Riemann surface.

- Riemann surfaces are the objects on which multi-valued functions become single-valued.

- A *connected component of the set of germs (i.e., an equivalence class) is called a *sheaf.

We can easily determine the Maclaurin series expansion of the exponential function because it is equal to its own derivative.[7]

$$e^x = \sum_{n=0}^{\infty} \frac{x^n}{n!} = 1 + x + \frac{x^2}{2!} + \frac{x^3}{3!} + \cdots$$

- The above holds true even if x is a matrix. See *Matrix exponential
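A short sketch of the Maclaurin series of the exponential function for a scalar x (the matrix case mentioned above is not covered here). The function name `exp_maclaurin` and the default of 30 terms are choices made for this illustration.

```python
import math


def exp_maclaurin(x, terms=30):
    """Sum the first `terms` terms of the series x**n / n!."""
    total = 0.0
    term = 1.0  # x**0 / 0!
    for n in range(terms):
        total += term
        term *= x / (n + 1)  # turns x**n / n! into x**(n+1) / (n+1)!
    return total


print(exp_maclaurin(1.0), math.e)
print(exp_maclaurin(-2.0), math.exp(-2.0))
```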

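And likewise for cos(x) and sin(x) because cosine is the derivative of sine which is the derivative of -cosine

$$\cos x = 1 - \frac{x^2}{2!} + \frac{x^4}{4!} - \cdots, \qquad \sin x = x - \frac{x^3}{3!} + \frac{x^5}{5!} - \cdots$$

It then follows that $e^{ix} = \cos x + i \sin x$ and therefore $e^{i\pi} = -1$. See Euler's formula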

- This makes the equation for a circle in the complex plane, and by extension sine and cosine, extremely simple and easy to work with especially with regard to differentiation and integration.

- For sine waves differentiation and integration are replaced with multiplication and division. Calculus is replaced with algebra. Therefore any expression that can be represented as a sum of sine waves can be easily differentiated or integrated.
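Euler's formula, and the claim that differentiation of a complex exponential becomes multiplication, can be spot-checked with Python's `cmath` module. The function names and the sample frequency below are illustrative assumptions.

```python
import cmath
import math


def euler_lhs(x):
    """e**(i*x) computed directly."""
    return cmath.exp(1j * x)


def euler_rhs(x):
    """cos(x) + i*sin(x)."""
    return complex(math.cos(x), math.sin(x))


def wave(t, omega=3.0):
    """A complex sine wave e**(i*omega*t)."""
    return cmath.exp(1j * omega * t)


def wave_slope(t, omega=3.0, h=1e-6):
    """Central-difference derivative of the wave with respect to t."""
    return (wave(t + h, omega) - wave(t - h, omega)) / (2 * h)


print(abs(euler_lhs(0.7) - euler_rhs(0.7)))  # close to 0
print(euler_lhs(math.pi))                    # close to -1
# Differentiating the wave is the same as multiplying it by i*omega.
print(abs(wave_slope(0.4) - 3.0j * wave(0.4)))  # close to 0
```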

Fourier Series

The Maclaurin series cannot be used for a discontinuous function like a square wave because such a function is not differentiable at its jumps. (*Distributions make it possible to differentiate functions whose derivatives do not exist in the classical sense. See *Generalized function.)

But remarkably we can use the Fourier series to expand it or any other periodic function into an infinite sum of sine waves each of which is fully differentiable!

$$f(t) = \frac{a_0}{2} + \sum_{n=1}^{\infty}\left[a_n\cos(nt) + b_n\sin(nt)\right]$$

A square wave consists of all odd frequencies n with the amplitude of each frequency being proportional to 1/n.

A Dirac comb consists of all integer frequencies with the amplitude of each frequency being 1.

sin²(x) = 0.5*cos(0x) - 0.5*cos(2x)
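The square-wave statement above can be illustrated with a partial sum. This sketch assumes the usual normalization, in which a square wave of height ±1 equals (4/π) times the sum of sin(nx)/n over odd n; the function name is hypothetical. Convergence is slow, so many harmonics are needed even away from the jumps.

```python
import math


def square_wave_partial_sum(x, harmonics):
    """Sum the first `harmonics` odd-frequency sine terms, scaled by 4/pi."""
    total = 0.0
    for k in range(harmonics):
        n = 2 * k + 1  # odd frequencies 1, 3, 5, ...
        total += math.sin(n * x) / n
    return 4.0 / math.pi * total


# In the middle of the positive half-period the sum approaches +1,
# and in the middle of the negative half-period it approaches -1.
print(square_wave_partial_sum(math.pi / 2, 2000))
print(square_wave_partial_sum(-math.pi / 2, 2000))
```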

- The reason this works is because sine and cosine are *orthogonal functions.

- Two vectors are said to be orthogonal when: $a_1 b_1 + a_2 b_2 + a_3 b_3 = 0$

- or more generally when: $\sum_i a_i b_i = 0$

- Two functions are said to be orthogonal when: $\int f(x)\,g(x)\,dx = 0$

- That means that multiplying any 2 sine waves of frequency n and frequency m and integrating over one period will always equal zero unless n=m.

- See the graph of sin²(x) to the right.

- See *Amplitude_modulation

- And of course ∫ fn*(f1+f2+f3+...) = ∫ (fn*f1) + ∫ (fn*f2) + ∫ (fn*f3) +...

- The complex form of the Fourier series uses complex exponentials instead of sine and cosine and uses both positive and negative frequencies (clockwise and counter clockwise) whose imaginary parts cancel.

- The complex coefficients encode both amplitude and phase and are complex conjugates of each other.

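- In n dimensions the Fourier transform is $\hat{f}(\nu) = \int_{\mathbb{R}^n} f(x)\,e^{-2\pi i\,x\cdot\nu}\,dx$, where the dot between x and ν indicates the inner product of Rⁿ.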

- A 2 dimensional Fourier series is used in video compression.

- A *discrete Fourier transform can be computed very efficiently by a *fast Fourier transform (FFT).

- The FFT has been described as "the most important numerical algorithm of our lifetime"

- In mathematical analysis, many generalizations of Fourier series have proven to be useful.

- They are all special cases of decompositions over an orthonormal basis of an inner product space.[10]

- *Spherical harmonics are a complete set of orthogonal functions on the sphere, and thus may be used to represent functions defined on the surface of a sphere, just as circular functions (sines and cosines) are used to represent functions on a circle via Fourier series.[11]

- Spherical harmonics are *basis functions for SO(3). See Laplace series.

- Every continuous function in the function space can be represented as a *linear combination of basis functions, just as every vector in a vector space can be represented as a linear combination of basis vectors.

- Every quadratic polynomial can be written as a·1 + bt + ct², that is, as a linear combination of the basis functions 1, t, and t².
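The orthogonality of sine waves described in the list above can be verified numerically. In this sketch the inner product of two functions is taken to be the integral of their product over one period, approximated with a midpoint rule; `inner_product` and `sine` are illustrative names.

```python
import math


def inner_product(f, g, a=0.0, b=2 * math.pi, steps=20_000):
    """Approximate the integral of f(x)*g(x) over [a, b] with the midpoint rule."""
    width = (b - a) / steps
    total = 0.0
    for i in range(steps):
        x = a + (i + 0.5) * width
        total += f(x) * g(x)
    return total * width


def sine(n):
    """Return the function x -> sin(n*x)."""
    return lambda x: math.sin(n * x)


print(inner_product(sine(2), sine(3)))  # close to 0: different frequencies
print(inner_product(sine(3), sine(3)))  # close to pi: same frequency
print(inner_product(sine(2), math.cos))  # close to 0: sine against cosine
```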

Transforms

Fourier transforms generalize Fourier series to nonperiodic functions like a single pulse of a square wave.

The more localized in the time domain (the shorter the pulse) the more the Fourier transform is spread out across the frequency domain and vice versa, a phenomenon known as the uncertainty principle.

Using the Fourier transform we can determine that the Dirac delta function consists of all frequencies with the amplitude of each frequency being 1.

- Laplace transforms generalize Fourier transforms to complex frequency $s = \sigma + i\omega$.

- Complex frequency includes a term corresponding to the amount of damping.

- , (assuming a > 0)

- The inverse Laplace transform is given by $f(t) = \mathcal{L}^{-1}\{F\}(t) = \frac{1}{2\pi i}\lim_{T\to\infty}\int_{\gamma - iT}^{\gamma + iT} e^{st}F(s)\,ds$

- where the integration is done along the vertical line Re(s) = γ in the complex plane such that γ is greater than the real part of all *singularities of F(s) and F(s) is bounded on the line, for example if the contour path is in the *region of convergence.

- If all singularities are in the left half-plane, or F(s) is an *entire function, then γ can be set to zero and the above inverse integral formula becomes identical to the *inverse Fourier transform.[12]

- The *Z-transform can be considered as a discrete-time equivalent of the Laplace transform. This similarity is explored in the theory of *time-scale calculus.[13]

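- Integral transforms generalize Fourier transforms to other *kernels (besides sine and cosine)

- Cauchy kernel = $\frac{1}{\zeta - z}$ (up to normalization)

- Hilbert kernel = $\cot\left(\frac{x - y}{2}\right)$ (up to normalization)

- Poisson Kernel:

- For the ball of radius r, $B_r$, in Rⁿ, the Poisson kernel takes the form: $P(x,\zeta) = \frac{r^2 - |x|^2}{r\,\omega_{n-1}\,|x - \zeta|^n}$

- where $x \in B_r$, $\zeta \in S$ (the surface of $B_r$), and $\omega_{n-1}$ is the *surface area of the unit (n−1)-sphere.

- unit disk (r=1) in the complex plane:[14] for $z = \rho e^{i\theta}$, $P_\rho(\theta) = \frac{1 - \rho^2}{1 - 2\rho\cos\theta + \rho^2}$

- Dirichlet kernel: $D_n(x) = \sum_{k=-n}^{n} e^{ikx} = \frac{\sin\left((n + \tfrac{1}{2})x\right)}{\sin(x/2)}$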

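The *convolution theorem states that[15]

$$\mathcal{F}\{f * g\} = \mathcal{F}\{f\} \cdot \mathcal{F}\{g\}$$

where · denotes point-wise multiplication. It also works the other way around:

$$\mathcal{F}\{f \cdot g\} = \mathcal{F}\{f\} * \mathcal{F}\{g\}$$

By applying the inverse Fourier transform $\mathcal{F}^{-1}$, we can write:

$$f * g = \mathcal{F}^{-1}\big\{\mathcal{F}\{f\} \cdot \mathcal{F}\{g\}\big\}$$

and:

$$f \cdot g = \mathcal{F}^{-1}\big\{\mathcal{F}\{f\} * \mathcal{F}\{g\}\big\}$$

This theorem also holds for the Laplace transform.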
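The convolution theorem has a discrete analogue that is easy to test. The sketch below is an assumption-laden illustration rather than the continuous statement itself: it uses a naive discrete Fourier transform written with `cmath`, circular (wrap-around) convolution, and two short made-up sequences.

```python
import cmath


def dft(signal):
    """Naive discrete Fourier transform."""
    n = len(signal)
    return [
        sum(signal[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def inverse_dft(spectrum):
    """Naive inverse discrete Fourier transform."""
    n = len(spectrum)
    return [
        sum(spectrum[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
        for t in range(n)
    ]


def circular_convolution(f, g):
    """Convolve two equal-length sequences with wrap-around indexing."""
    n = len(f)
    return [sum(f[m] * g[(t - m) % n] for m in range(n)) for t in range(n)]


f = [1.0, 2.0, 0.0, -1.0]
g = [0.5, 0.0, 0.25, 0.0]

direct = circular_convolution(f, g)
# Transform both, multiply point-wise, transform back.
via_transform = inverse_dft([a * b for a, b in zip(dft(f), dft(g))])

print(direct)
print([round(value.real, 10) for value in via_transform])
```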

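The *Hilbert transform is a *multiplier operator. The multiplier of H is σH(ω) = −i sgn(ω) where sgn is the *signum function. Therefore:

$$\mathcal{F}(H(u))(\omega) = (-i\,\operatorname{sgn}(\omega)) \cdot \mathcal{F}(u)(\omega)$$

where $\mathcal{F}$ denotes the Fourier transform.

Since sgn(x) = sgn(2πx), it follows that this result applies to the three common definitions of $\mathcal{F}$.

By Euler's formula,

$$\sigma_H(\omega) = \begin{cases} i = e^{+i\pi/2} & \text{for } \omega < 0 \\ 0 & \text{for } \omega = 0 \\ -i = e^{-i\pi/2} & \text{for } \omega > 0 \end{cases}$$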

Therefore, H(u)(t) has the effect of shifting the phase of the *negative frequency components of u(t) by +90° (π/2 radians) and the phase of the positive frequency components by −90°.

And i·H(u)(t) has the effect of restoring the positive frequency components while shifting the negative frequency ones an additional +90°, resulting in their negation.

In electrical engineering, the convolution of one function (the input signal) with a second function (the impulse response) gives the output of a linear time-invariant system (LTI).

At any given moment, the output is an accumulated effect of all the prior values of the input function

Differential equations

- See also: *Variation of parameters

Simple harmonic motion shown both in real space and *phase space.

*Simple harmonic motion of a mass on a spring is described by a second-order linear ordinary differential equation.

$$m\frac{d^2x}{dt^2} = -kx$$

where m is the inertial mass, x is its displacement from the equilibrium, and k is the spring constant.

Solving for x produces

$$x(t) = A\cos(\omega t + \varphi)$$

A is the amplitude (maximum displacement from the equilibrium position), $\omega = \sqrt{k/m}$ is the angular frequency, and φ is the phase.

Energy passes back and forth between the potential energy in the spring and the kinetic energy of the mass.

The important thing to note here is that the frequency of the oscillation depends only on the mass and the stiffness of the spring and is totally independent of the amplitude.

That is the defining characteristic of resonance.
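The simple harmonic motion described above can be checked numerically. This sketch assumes the standard solution x(t) = A·cos(ωt + φ) with ω = √(k/m), estimates the acceleration with a second-order finite difference, and confirms that m·x″ ≈ −k·x. The function names and sample values are illustrative. Note that the period depends only on k and m, not on the amplitude.

```python
import math


def angular_frequency(k, m):
    return math.sqrt(k / m)


def period(k, m):
    """Time for one full oscillation; the amplitude does not appear."""
    return 2 * math.pi / angular_frequency(k, m)


def displacement(t, amplitude, k, m, phase=0.0):
    return amplitude * math.cos(angular_frequency(k, m) * t + phase)


def acceleration(t, amplitude, k, m, phase=0.0, h=1e-4):
    """Second derivative of the displacement, by central finite difference."""
    x_minus = displacement(t - h, amplitude, k, m, phase)
    x_here = displacement(t, amplitude, k, m, phase)
    x_plus = displacement(t + h, amplitude, k, m, phase)
    return (x_plus - 2 * x_here + x_minus) / h**2


m, k = 2.0, 8.0
for amplitude in (0.5, 1.5):
    x = displacement(0.3, amplitude, k, m)
    a = acceleration(0.3, amplitude, k, m)
    print(amplitude, m * a, -k * x, period(k, m))
```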

RLC series circuit

*Kirchhoff's voltage law states that the sum of the emfs in any closed loop of any electronic circuit is equal to the sum of the *voltage drops in that loop.[16] For the series RLC circuit:

$$V(t) = R\,I(t) + L\,\frac{dI}{dt} + \frac{Q(t)}{C}$$

V is the voltage, R is the resistance, L is the inductance, C is the capacitance.

I = dQ/dt is the current.

It makes no difference whether the current is a small number of charges moving very fast or a large number of charges moving slowly.

In reality *the latter is the case.

*Damping oscillation is a typical *transient response

If V(t)=0 then the only solution to the equation is the transient response which is a rapidly decaying sine wave with the same frequency as the resonant frequency of the circuit.

- Like a mass (inductance) on a spring (capacitance) the circuit will resonate at one frequency.

- Energy passes back and forth between the capacitor and the inductor with some loss as it passes through the resistor.

If V(t)=sin(t) from -∞ to +∞ then the only solution is a sine wave with the same frequency as V(t) but with a different amplitude and phase.

If V(t) is zero until t=0 and then equals sin(t) then I(t) will be zero until t=0 after which it will consist of the steady state response plus a transient response.

From Wikipedia:Characteristic equation (calculus):

Starting with a linear homogeneous differential equation with constant coefficients $a_n, a_{n-1}, \ldots, a_1, a_0$,

$$a_n y^{(n)} + a_{n-1} y^{(n-1)} + \cdots + a_1 y' + a_0 y = 0$$

it can be seen that if $y(x) = e^{rx}$, each term would be a constant multiple of $e^{rx}$. This results from the fact that the derivative of the exponential function $e^{rx}$ is a multiple of itself. Therefore, $y' = re^{rx}$, $y'' = r^2 e^{rx}$, and $y^{(n)} = r^n e^{rx}$ are all multiples. This suggests that certain values of $r$ will allow multiples of $e^{rx}$ to sum to zero, thus solving the homogeneous differential equation. In order to solve for $r$, one can substitute $y = e^{rx}$ and its derivatives into the differential equation to get

$$a_n r^n e^{rx} + a_{n-1} r^{n-1} e^{rx} + \cdots + a_1 r e^{rx} + a_0 e^{rx} = 0$$

Since $e^{rx}$ can never equal zero, it can be divided out, giving the characteristic equation

$$a_n r^n + a_{n-1} r^{n-1} + \cdots + a_1 r + a_0 = 0$$

By solving for the roots, $r$, in this characteristic equation, one can find the general solution to the differential equation. For example, if $r$ is found to equal 3, then the general solution will be $y(x) = ce^{3x}$, where $c$ is an arbitrary constant.
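A minimal sketch of the characteristic-equation method, restricted to the second-order case a₂y″ + a₁y′ + a₀y = 0 (an assumption made to keep the example short). The helper names are hypothetical. Each root r of the characteristic equation gives a solution e^(rx), which the residual function confirms by substituting back into the differential equation.

```python
import cmath


def characteristic_roots(a2, a1, a0):
    """Roots of a2*r**2 + a1*r + a0 = 0 (a2 must be nonzero)."""
    sqrt_disc = cmath.sqrt(a1 * a1 - 4 * a2 * a0)
    return (-a1 + sqrt_disc) / (2 * a2), (-a1 - sqrt_disc) / (2 * a2)


def residual(a2, a1, a0, r, x):
    """Value of a2*y'' + a1*y' + a0*y for the trial solution y = exp(r*x)."""
    y = cmath.exp(r * x)
    return a2 * r**2 * y + a1 * r * y + a0 * y


# y'' - 3y' + 2y = 0 has characteristic equation r^2 - 3r + 2 = 0.
roots = characteristic_roots(1, -3, 2)
print(roots)  # roots 2 and 1
print([abs(residual(1, -3, 2, r, 0.5)) for r in roots])  # both close to 0

# y'' + 4y = 0 gives purely imaginary roots, i.e. sine-wave solutions.
print(characteristic_roots(1, 0, 4))
```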

Partial derivatives

- See also: *Currying

*Partial derivatives and *multiple integrals generalize derivatives and integrals to multiple dimensions.

The partial derivative with respect to one variable is found by simply treating all other variables as though they were constants.

Multiple integrals are found the same way.
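The rule of treating all other variables as constants translates directly into a numerical approximation: vary one argument and hold the rest fixed. The function `f` below is a made-up example, and `partial_derivative` is an illustrative helper.

```python
def partial_derivative(f, point, index, h=1e-6):
    """Central-difference partial derivative of f with respect to argument `index`."""
    forward = list(point)
    backward = list(point)
    forward[index] += h   # only one coordinate changes
    backward[index] -= h  # all others are held constant
    return (f(*forward) - f(*backward)) / (2 * h)


def f(x, y, z):
    """Hypothetical scalar function: df/dx = 2xy, df/dy = x**2, df/dz = 1."""
    return x**2 * y + z


point = (1.0, 2.0, 3.0)
print(partial_derivative(f, point, 0))  # close to 4
print(partial_derivative(f, point, 1))  # close to 1
print(partial_derivative(f, point, 2))  # close to 1
```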

Let f(x, y, z) be a scalar function (for example electric potential energy or temperature).

- A 2 dimensional example of a scalar function would be an elevation map.

- (Contour lines of an elevation map are an example of a *level set.)

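The total derivative of f(x(t), y(t)) with respect to t is[17]

$$\frac{df}{dt} = \frac{\partial f}{\partial x}\frac{dx}{dt} + \frac{\partial f}{\partial y}\frac{dy}{dt}$$

And the differential is

$$df = \frac{\partial f}{\partial x}\,dx + \frac{\partial f}{\partial y}\,dy$$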

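Gradient of scalar field

The Gradient of f(x, y, z), $\nabla f = \left(\frac{\partial f}{\partial x}, \frac{\partial f}{\partial y}, \frac{\partial f}{\partial z}\right)$, is a vector field whose value at each point is a vector (technically it's a covector because it has units of distance⁻¹) that points "uphill", in the direction of steepest increase, with a magnitude equal to the slope of the function at that point.

You can think of it as how much the function changes per unit distance.

Heat flows "downhill", opposite to the gradient of temperature, at a rate proportional to the gradient's magnitude.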

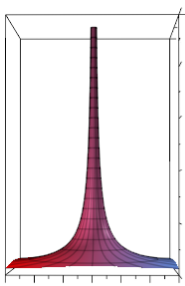

For static (unchanging) fields the electric field is the negative of the gradient of the electric potential. The image below shows the potential of a single point charge.

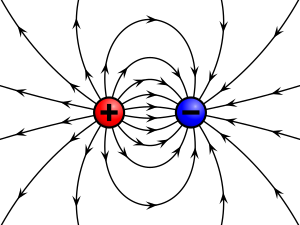

Its negative gradient gives the electric field which is shown in the 2 images below. In the image on the left the field strength is proportional to the length of the vectors. In the image on the right the field strength is proportional to the density of the *flux lines. The image is 2 dimensional and therefore the flux density in the image follows an inverse first power law but in reality the field lines from a real proton or electron spread outward in 3 dimensions and therefore follow an inverse square law. Inverse square means that at twice the distance the field is four times weaker.

The field of 2 point charges is simply the sum of the fields of the separate charges.

Divergence

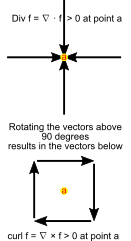

The Divergence of a vector field is a scalar.

The divergence of the electric field is non-zero wherever there is electric charge and zero everywhere else.

*Field lines begin and end at charges because the charges create the electric field.

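The Laplacian is the divergence of the gradient of a function:

$$\nabla^2 f = \nabla \cdot \nabla f = \frac{\partial^2 f}{\partial x^2} + \frac{\partial^2 f}{\partial y^2} + \frac{\partial^2 f}{\partial z^2}$$

- *Elliptic operators generalize the Laplacian.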

Curl

- See also: *Biot–Savart law

The Curl of a vector field describes how much the vector field is twisted.

(The field may even go in circles.)

The curl of a static magnetic field at a point is proportional to the current density vector at that point, because current creates the magnetic field.

In 3 dimensions the dual of the current vector is a bivector.

In 2 dimensions this reduces to a single scalar: $\frac{\partial V_2}{\partial x} - \frac{\partial V_1}{\partial y}$

The curl of the gradient of any scalar field is always zero.

The curl of a vector field in 4 dimensions would no longer be a vector. It would be a bivector. However the curl of a bivector field in 4 dimensions would still be a vector.

See also: *differential forms.

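Gradient of vector field

The Gradient of a vector field is a tensor field. Each row is the gradient of the corresponding scalar function:

$$\nabla \mathbf{V} = \begin{pmatrix} \frac{\partial V_1}{\partial x} & \frac{\partial V_1}{\partial y} & \frac{\partial V_1}{\partial z} \\ \frac{\partial V_2}{\partial x} & \frac{\partial V_2}{\partial y} & \frac{\partial V_2}{\partial z} \\ \frac{\partial V_3}{\partial x} & \frac{\partial V_3}{\partial y} & \frac{\partial V_3}{\partial z} \end{pmatrix}$$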

- Remember that $dx \wedge dy = -\,dy \wedge dx$ because rotation from y to x is the negative of rotation from x to y.

Partial differential equations can be classified as *parabolic, *hyperbolic and *elliptic.

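Green's theorem

The line integral along a 2-D vector field is:

$$\int (V_1\,dx + V_2\,dy) = \int_a^b \left[V_1(x(t), y(t))\,\frac{dx}{dt} + V_2(x(t), y(t))\,\frac{dy}{dt}\right] dt$$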

Divergence is zero everywhere except at the origin where a charge is located. A line integral around any of the red circles will give the same answer because all the circles contain the same amount of charge.

You can think of each field line as ending in a single unit of charge.

Green's theorem states that if you want to know how many field lines exit a region then you can either count how many lines cross the boundary (perform a line integral) or you can simply count the number of charges (or the amount of current) within that region. See Divergence theorem.

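In 2 dimensions this is (with the boundary C of the region D traversed counterclockwise)

$$\oint_C (V_1\,dy - V_2\,dx) = \iint_D \left(\frac{\partial V_1}{\partial x} + \frac{\partial V_2}{\partial y}\right) dx\,dy$$

A version of Green's theorem also works for curl.

$$\oint_C (V_1\,dx + V_2\,dy) = \iint_D \left(\frac{\partial V_2}{\partial x} - \frac{\partial V_1}{\partial y}\right) dx\,dy$$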

Green's theorem is perfectly obvious when dealing with vector fields but is much less obvious when applied to complex valued functions in the complex plane.

See also Kelvin–Stokes theorem

The complex plane

- Highly recommended: Fundamentals of complex analysis with applications to engineering and science by Saff and Snider

- External link: http://www.solitaryroad.com/c606.html

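The formula for the derivative of a complex function f at a point z₀ is the same as for a real function:

$$f'(z_0) = \lim_{z \to z_0} \frac{f(z) - f(z_0)}{z - z_0}$$

Every complex function can be written in the form

$$f(z) = f(x + iy) = f_x(x, y) + i\,f_y(x, y)$$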

Because the complex plane is two dimensional, z can approach z0 from an infinite number of different directions.

However, if within a certain region the function f is holomorphic (that is, complex differentiable) then, within that region, it will only have a single derivative whose value does not depend on the direction in which z approaches z₀, despite the fact that fx and fy each have 2 partial derivatives, one in the x direction and one in the y direction.

$$\frac{\partial f_x}{\partial x} = \frac{\partial f_y}{\partial y}, \qquad \frac{\partial f_x}{\partial y} = -\frac{\partial f_y}{\partial x}$$

This is only possible if the Cauchy–Riemann conditions above are true.

An *entire function, also called an integral function, is a complex-valued function that is holomorphic at all finite points over the whole complex plane.

As with real valued functions, a line integral of a holomorphic function depends only on the starting point and the end point and is totally independent of the path taken.

The starting point and the end point for any loop are the same. This, of course, implies Cauchy's integral theorem for any holomorphic function f:

$$\oint_\gamma f(z)\,dz = 0$$

Therefore curl and divergence must both be zero for a function to be holomorphic.

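Green's theorem for functions (not necessarily holomorphic) in the complex plane:

$$\oint_C f(z)\,dz = i \iint_D \left(\frac{\partial f}{\partial x} + i\,\frac{\partial f}{\partial y}\right) dx\,dy$$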

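Computing the residue of a monomial[18]

$$\oint_C z^n\,dz = \int_0^{2\pi} (re^{i\theta})^n\, i r e^{i\theta}\,d\theta = i r^{n+1}\int_0^{2\pi} e^{i(n+1)\theta}\,d\theta = \begin{cases} 2\pi i & \text{if } n = -1 \\ 0 & \text{otherwise} \end{cases}$$

- where $C$ is the circle with radius $r$, therefore $z \to re^{i\theta}$ and $dz \to d(re^{i\theta}) = ire^{i\theta}\,d\theta$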

The last term in the equation above equals zero when r=0. Since its value is independent of r it must therefore equal zero for all values of r.
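The contour integral of a monomial around a circle centered on the origin can be approximated numerically. The sketch below assumes the parametrization z = r·e^(iθ), dz = i·z·dθ and a midpoint rule in θ; the function names are illustrative. The integral is 2πi for the power −1 and zero for every other integer power, independent of the radius.

```python
import cmath
import math


def circle_integral(f, radius=1.0, steps=2000):
    """Approximate the integral of f(z) dz counterclockwise around |z| = radius."""
    total = 0j
    dtheta = 2 * math.pi / steps
    for i in range(steps):
        theta = (i + 0.5) * dtheta
        z = radius * cmath.exp(1j * theta)
        dz = 1j * z * dtheta  # derivative of r*e^(i*theta) times dtheta
        total += f(z) * dz
    return total


def monomial(k):
    """Return the function z -> z**k for an integer k."""
    return lambda z: z**k


print(circle_integral(monomial(-1)))              # close to 2*pi*i
print(circle_integral(monomial(-1), radius=3.0))  # same value for another radius
print(abs(circle_integral(monomial(2))))          # close to 0
print(abs(circle_integral(monomial(-2))))         # close to 0
```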

Cauchy's integral formula states that the value of a holomorphic function within a disc is determined entirely by the values on the boundary of the disc:

$$f(a) = \frac{1}{2\pi i}\oint_\gamma \frac{f(z)}{z - a}\,dz$$

Divergence can be nonzero outside the disc.

Cauchy's integral formula can be generalized to more than two dimensions.

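Differentiating Cauchy's integral formula n times with respect to a gives:

$$f^{(n)}(a) = \frac{n!}{2\pi i}\oint_\gamma \frac{f(z)}{(z - a)^{n+1}}\,dz$$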

- Note that n does not have to be an integer. See *Fractional calculus.

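The Taylor series becomes:

$$f(z) = \sum_{n=0}^{\infty} a_n (z - z_0)^n, \qquad a_n = \frac{f^{(n)}(z_0)}{n!} = \frac{1}{2\pi i}\oint_\gamma \frac{f(z)}{(z - z_0)^{n+1}}\,dz$$

The *Laurent series for a complex function f(z) about a point z₀ is given by:

$$f(z) = \sum_{n=-\infty}^{\infty} a_n (z - z_0)^n, \qquad a_n = \frac{1}{2\pi i}\oint_\gamma \frac{f(z)}{(z - z_0)^{n+1}}\,dz$$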

The positive subscripts correspond to a line integral around the outer part of the annulus and the negative subscripts correspond to a line integral around the inner part of the annulus. In reality it makes no difference where the line integral is so both line integrals can be moved until they correspond to the same contour gamma. See also: *Z-transform

The function $f(z) = \frac{1}{(z - 1)(z - 2)}$ has poles at z=1 and z=2. It therefore has 3 different Laurent series centered on the origin (z₀ = 0):

- For 0 < |z| < 1 the Laurent series has only positive subscripts and is the Taylor series.

- For 1 < |z| < 2 the Laurent series has positive and negative subscripts.

- For 2 < |z| the Laurent series has only negative subscripts.

*Cauchy formula for repeated integration:

$$f^{(-n)}(x) = \frac{1}{(n-1)!}\int_a^x (x - t)^{n-1} f(t)\,dt$$

For every holomorphic function both fx and fy are harmonic functions.

Any two-dimensional harmonic function is the real part of a complex analytic function.

See also: complex analysis.[19]

- fy is the *harmonic conjugate of fx.

- Geometrically fx and fy are related as having orthogonal trajectories, away from the zeroes of the underlying holomorphic function; the contours on which fx and fy are constant (*equipotentials and *streamlines) cross at right angles.

- In this regard, fx+ify would be the complex potential, where fx is the *potential function and fy is the *stream function.[20]

- fx and fy are both solutions of Laplace's equation, so the divergence of the gradient is zero.

- *Legendre functions are solutions to Legendre's differential equation.

- This ordinary differential equation is frequently encountered when solving Laplace's equation (and related partial differential equations) in spherical coordinates.

- A harmonic function is a scalar potential function therefore the curl of the gradient will also be zero.

- Harmonic functions are real analogues to holomorphic functions.

- All harmonic functions are analytic, i.e. they can be locally expressed as power series.

- This is a general fact about *elliptic operators, of which the Laplacian is a major example.

- The value of a harmonic function at any point inside a disk is a *weighted average of the value of the function on the boundary of the disk.

- The *Poisson kernel gives different weight to different points on the boundary except when x=0.

- The value at the center of the disk (x=0) equals the average of the equally weighted values on the boundary. See The_mean_value_property.

- All locally integrable functions satisfying the mean-value property are both infinitely differentiable and harmonic.

- The kernel itself appears to simply be 1/r^n shifted to the point x and multiplied by different constants.

- For a circle (K = Poisson Kernel): $u(x) = \int_S u(\zeta)\,K(x,\zeta)\,d\sigma(\zeta)$

Next section: Intermediate mathematics/Discrete mathematics


External links

- MIT open courseware

- Cheat sheets

- http://mathinsight.org

- https://math.stackexchange.com

- https://www.eng.famu.fsu.edu/~dommelen/quantum/style_a/IV._Supplementary_Informati.html

- http://www.sosmath.com

- https://webhome.phy.duke.edu/~rgb/Class/intro_math_review/intro_math_review/node1.html

- Wikiversity:Mathematics

- w:c:4chan-science:Mathematics

References

- ↑ Andrew Marx, Shortcut Algebra I: A Quick and Easy Way to Increase Your Algebra I Knowledge and Test Scores, Kaplan Publishing, 2007, ISBN 9781419552885, 288 pages, page 51

- ↑ Wikipedia:Multiplicity (mathematics)

- ↑ Wikipedia:Binomial theorem

- ↑ Wikipedia:Partial fraction decomposition

- ↑ Wikipedia:Basic hypergeometric series

- ↑ Wikipedia:q-analog

- ↑ 7.0 7.1 $e^x = y = dy/dx$, so $dx = dy/y = (1/y)\,dy$ and therefore $\int (1/y)\,dy = \int dx = x = \ln(y)$

- ↑ Wikipedia:Product rule

- ↑ Wikipedia:Monotonic function

- ↑ Wikipedia:Generalized Fourier series

- ↑ Wikipedia:Spherical harmonics

- ↑ Wikipedia:Inverse Laplace transform

- ↑ Wikipedia:Z-transform

- ↑ http://mathworld.wolfram.com/PoissonKernel.html

- ↑ Wikipedia:Convolution theorem

- ↑ Wikipedia:RLC circuit

- ↑ Wikipedia:Total derivative

- ↑ Wikipedia:Residue (complex analysis)

- ↑ Wikipedia:Potential theory

- ↑ Wikipedia:Harmonic conjugate
